Fixing the NumPy error when running a YOLOv8 model converted from PyTorch to TensorRT (.engine)

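This article was last updated on 2024-09-13. More than a year has passed since then, so some of the content may be out of date; please verify it before relying on it.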

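Background: I followed the official tutorial to convert a PyTorch model to TensorRT's .engine format, hoping to speed up loading and inference. On Windows 10 with an RTX 4060 this worked without any problems. Inference time dropped from 20 ms/frame to 8 ms/frame, a 2.5× speedup.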
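For reference, here is a minimal sketch of the export step and a per-frame timing helper. It assumes the `ultralytics` package and a trained `best.pt`. `export_engine` and `mean_latency_ms` are hypothetical helper names. The post does not say how the 20 ms and 8 ms figures were measured, so the timing function is only one possible method.

```python
import time
from typing import Callable


def export_engine(weights: str = "best.pt", **export_kwargs):
    """Export a YOLOv8 .pt checkpoint to a TensorRT .engine file.

    Returns whatever ultralytics' YOLO.export() returns (the location of the
    exported file). Run this on the machine that will do inference: a TensorRT
    engine is tied to the GPU and TensorRT version it was built with.
    """
    from ultralytics import YOLO  # lazy import, so this module loads without ultralytics

    model = YOLO(weights)
    # format="engine" selects TensorRT; extra options such as half=True are passed through.
    return model.export(format="engine", **export_kwargs)


def mean_latency_ms(run_once: Callable[[], object], frames: int = 100, warmup: int = 10) -> float:
    """Average wall-clock time per call in milliseconds, measured after a few warm-up calls."""
    if frames <= 0:
        raise ValueError("frames must be positive")
    for _ in range(warmup):
        run_once()  # warm-up calls are not timed (first calls often include setup cost)
    start = time.perf_counter()
    for _ in range(frames):
        run_once()
    return (time.perf_counter() - start) * 1000.0 / frames
```

For example, you could run `export_engine("best.pt", half=True)` on the target device. Then compare `mean_latency_ms(lambda: pt_model(frame))` against the same call on the loaded .engine model, where `frame` is any test image.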

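On a Jetson Xavier NX, however, things went differently. The conversion itself finished without reporting any errors, but as soon as I tried to load the model and use it, it failed with: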

```
WARNING ⚠️ Unable to automatically guess model task, assuming 'task=detect'. Explicitly define task for your model, i.e. 'task=detect', 'segment', 'classify','pose' or 'obb'.
Loading CCVision/train/yolov8n_epochs500_batch50/weights/best.engine for TensorRT inference...
[05/11/2024-18:23:11] [TRT] [I] Loaded engine size: 17 MiB
[05/11/2024-18:23:15] [TRT] [I] [MemUsageChange] Init cuDNN: CPU +342, GPU +323, now: CPU 700, GPU 3714 (MiB)
[05/11/2024-18:23:15] [TRT] [I] [MemUsageChange] TensorRT-managed allocation in engine deserialization: CPU +0, GPU +22, now: CPU 0, GPU 22 (MiB)
[05/11/2024-18:23:15] [TRT] [I] [MemUsageChange] Init cuDNN: CPU +0, GPU +0, now: CPU 683, GPU 3714 (MiB)
[05/11/2024-18:23:15] [TRT] [I] [MemUsageChange] TensorRT-managed allocation in IExecutionContext creation: CPU +0, GPU +596, now: CPU 0, GPU 618 (MiB)
/usr/lib/python3.8/dist-packages/tensorrt/__init__.py:166: FutureWarning: In the future `np.bool` will be defined as the corresponding NumPy scalar.
  bool: np.bool,
Traceback (most recent call last):
  File "yolodetect.py", line 10, in <module>
    results = model(stream=True, source=0, show=True)
  File "/home/jetson/.local/lib/python3.8/site-packages/ultralytics/engine/model.py", line 176, in __call__
    return self.predict(source, stream, **kwargs)
  File "/home/jetson/.local/lib/python3.8/site-packages/ultralytics/engine/model.py", line 445, in predict
    self.predictor.setup_model(model=self.model, verbose=is_cli)
  File "/home/jetson/.local/lib/python3.8/site-packages/ultralytics/engine/predictor.py", line 297, in setup_model
    self.model = AutoBackend(
  File "/home/jetson/.local/lib/python3.8/site-packages/torch/utils/_contextlib.py", line 115, in decorate_context
    return func(*args, **kwargs)
  File "/home/jetson/.local/lib/python3.8/site-packages/ultralytics/nn/autobackend.py", line 273, in __init__
    dtype = trt.nptype(model.get_binding_dtype(i))
  File "/usr/lib/python3.8/dist-packages/tensorrt/__init__.py", line 166, in nptype
    bool: np.bool,
  File "/home/jetson/.local/lib/python3.8/site-packages/numpy/__init__.py", line 305, in __getattr__
    raise AttributeError(__former_attrs__[attr])
AttributeError: module 'numpy' has no attribute 'bool'.
`np.bool` was a deprecated alias for the builtin `bool`. To avoid this error in existing code, use `bool` by itself. Doing this will not modify any behavior and is safe. If you specifically wanted the numpy scalar type, use `np.bool_` here.
The aliases was originally deprecated in NumPy 1.20; for more details and guidance see the original release note at:
https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations
```

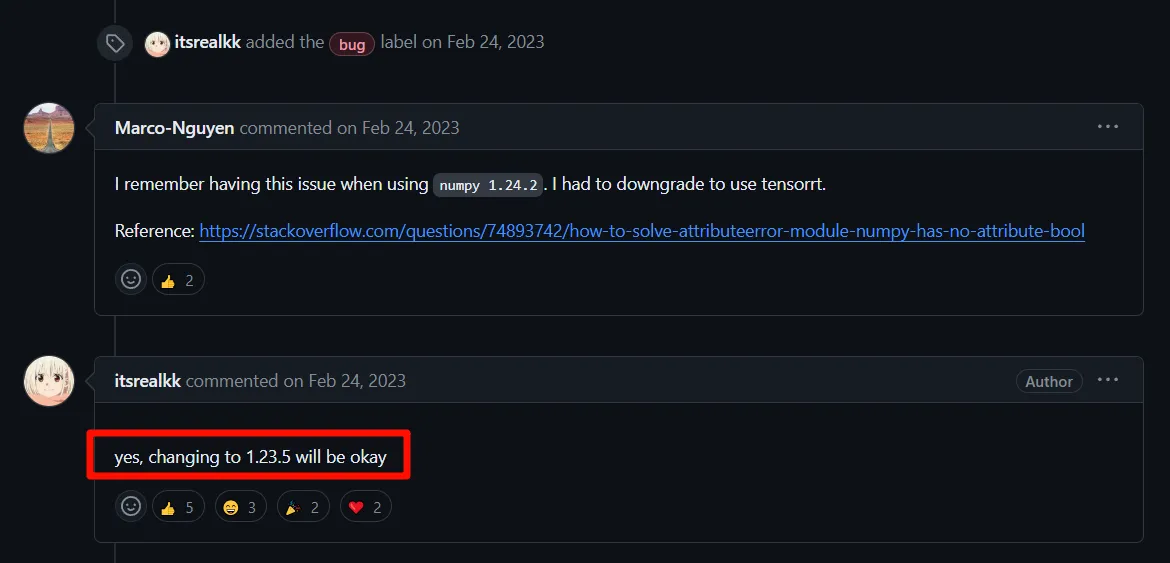
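Separately from the NumPy error, the first line of the log warns that the task could not be inferred from the .engine file. Below is a minimal sketch that loads the engine with the task stated explicitly, as the warning suggests, and consumes the generator that `stream=True` returns. `run_engine` is a hypothetical helper, since only line 10 of `yolodetect.py` appears in the traceback. This does not fix the NumPy error itself.

```python
def run_engine(engine_path: str, source=0, show: bool = True) -> int:
    """Run a TensorRT .engine detection model on a source and return the number of frames processed."""
    from ultralytics import YOLO  # lazy import, so this module loads without ultralytics

    # Stating the task explicitly avoids the "Unable to automatically guess model task" warning.
    model = YOLO(engine_path, task="detect")
    frames = 0
    # With stream=True the call returns a generator; inference runs as the loop consumes it.
    for _result in model(source=source, stream=True, show=show):
        frames += 1
    return frames
```

With `source=0` (the first camera), the loop keeps running until the stream stops or the process is interrupted.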

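A quick search showed that others on GitHub have hit the same problem, and what the reports have in common is that they are all on Ubuntu.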
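Since `np.bool` was removed in NumPy 1.24, whether a machine hits this error is decided by the installed NumPy version, together with a TensorRT build that still uses `np.bool`. Here is a small stdlib-only sketch for checking this from the same interpreter that runs the model. The helper names are hypothetical, and the check only reasons about the NumPy 1.x series, which is what Python 3.8 can install.

```python
import re
from importlib.metadata import PackageNotFoundError, version
from typing import Optional, Tuple


def parse_release(ver: str) -> Tuple[int, int]:
    """Return (major, minor) from a version string such as '1.23.5' or '1.24.0rc1'."""
    match = re.match(r"(\d+)\.(\d+)", ver)
    if match is None:
        raise ValueError(f"unrecognised version string: {ver!r}")
    return int(match.group(1)), int(match.group(2))


def has_legacy_bool_alias(numpy_version: str) -> bool:
    """True if this NumPy still defines np.bool as the builtin bool (deprecated in 1.20, removed in 1.24)."""
    return parse_release(numpy_version) < (1, 24)


def installed_numpy_version() -> Optional[str]:
    """Version of the numpy distribution visible to this interpreter, or None if it is missing."""
    try:
        return version("numpy")
    except PackageNotFoundError:
        return None


def describe_numpy_for_tensorrt() -> str:
    """One-line summary of whether the installed NumPy still provides the legacy np.bool alias."""
    ver = installed_numpy_version()
    if ver is None:
        return "numpy is not installed for this interpreter"
    major, _minor = parse_release(ver)
    if major != 1:
        return f"numpy {ver}: outside the 1.x series this check covers"
    if has_legacy_bool_alias(ver):
        return f"numpy {ver}: np.bool is available"
    return f"numpy {ver}: np.bool was removed; TensorRT code that uses it will fail"
```

Running `print(describe_numpy_for_tensorrt())` with the same Python that runs `yolodetect.py` shows which case applies.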

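Downgrading NumPy to 1.23.5 does fix it. The `np.bool` alias was deprecated in NumPy 1.20 and removed in 1.24, so 1.23.x is the last series that still provides it. However, pip also warns: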

```
jetson@ubuntu:~/RoboContest/#CyberCartNX$ pip install numpy==1.23.5
Defaulting to user installation because normal site-packages is not writeable
Looking in indexes: https://mirror.sjtu.edu.cn/pypi/web/simple
Collecting numpy==1.23.5
Downloading https://mirror.sjtu.edu.cn/pypi-packages/bf/d1/1017fe3f5d65c4fe054a793f18f940d913868bb2846a02d3f6244a829a30/numpy-1.23.5-cp38-cp38-manylinux_2_17_aarch64.manylinux2014_aarch64.whl (14.0 MB)
━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 14.0/14.0 MB 4.3 MB/s eta 0:00:00
Installing collected packages: numpy
Attempting uninstall: numpy
Found existing installation: numpy 1.19.5
Uninstalling numpy-1.19.5:
Successfully uninstalled numpy-1.19.5
ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.
onnxruntime-gpu 1.17.0 requires numpy>=1.24.4, but you have numpy 1.23.5 which is incompatible.
Successfully installed numpy-1.23.5
```

In other words, 1.23.5 is still too old for some packages (here, onnxruntime-gpu 1.17.0 requires numpy>=1.24.4). But at least the model now loads and runs inference.
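On this setup, pinning alone can hardly avoid the conflict. As far as I know, NumPy 1.24.x is the last series that supports Python 3.8, so onnxruntime-gpu 1.17.0's `numpy>=1.24.4` leaves only 1.24.4, which no longer has `np.bool`. One workaround, not tested in this post, is to restore the alias at runtime before the engine is loaded. The sketch assumes `np.bool` is the only removed alias the installed TensorRT bindings use; it is the only one in the traceback above. `restore_np_bool` is a hypothetical helper, not part of NumPy or TensorRT.

```python
import types
import warnings


def restore_np_bool(np_module: types.ModuleType) -> bool:
    """Re-add the np.bool alias that NumPy 1.24 removed.

    Before 1.24, np.bool was simply the builtin bool, so that is what gets restored.
    Returns True if the alias was added, False if the module already had one.
    """
    with warnings.catch_warnings():
        # NumPy 1.24+ emits a FutureWarning from its module __getattr__ and then raises
        # AttributeError, so hasattr() returns False; silence the warning during the check.
        warnings.simplefilter("ignore", FutureWarning)
        already_present = hasattr(np_module, "bool")
    if already_present:
        return False
    np_module.bool = bool  # plain module attribute, found before numpy's __getattr__ is consulted
    return True
```

In `yolodetect.py` this would mean calling `import numpy` and then `restore_np_bool(numpy)` near the top, before the .engine model is created. Because this patches NumPy globally for the whole process, it is a stopgap rather than a proper fix.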

The problem is solved for now; I'll update this post if anything else comes up.


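This is an original article licensed under CC BY-NC-SA 4.0. You may repost or modify it free of charge for non-commercial purposes; full reposts must credit Patrick's Blog as the source.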
